Optimized for AI & ML Workloads

At 1pbps, our high-performance infrastructure delivers the compute power that complex AI and machine learning applications need, with a focus on speed, scalability, and efficiency.

Enterprise-Grade Security

Security is built into every layer — from hardware to network — to keep your AI models and training data protected at all times.

With DDoS protection, isolated environments, and 24/7 monitoring, 1pbps ensures peace of mind for your sensitive workloads.

NVIDIA GPU servers designed for high-intensity workloads

We’ve selected NVIDIA GPUs for their proven ability to accelerate machine learning and AI workflows. These GPUs are optimized for training complex models and executing efficient AI inference, ensuring consistent performance for demanding applications.

NVIDIA RTX A4000 16GB

Ideal for video encoding and virtual desktop infrastructure (VDI).

With CPU: Intel® Xeon® E-2388G

RAM options: 16 GB - 128 GB

NVIDIA L4 24GB

A high-performance, energy-efficient GPU designed for video and generative AI workloads.

With CPU: AMD EPYC™ 4464P

RAM options: 16 GB - 192 GB

NVIDIA A40 48GB

A powerful solution for deep learning, AI inference, and high-performance video encoding.

With CPU: AMD EPYC™ 7443P

RAM options: 16 GB - 128 GB

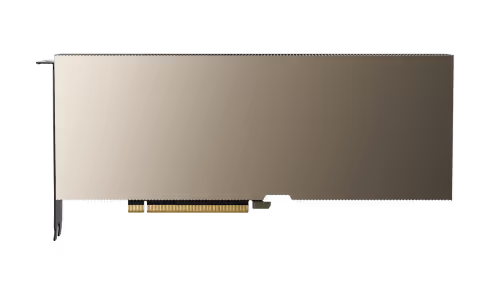

NVIDIA L40S 48GB

A high-performance GPU for AI inference, rendering, and complex workloads.

With CPU: AMD EPYC™ 7443P

RAM options: 128 GB - 512 GB

NVIDIA A100 80GB

The market standard for AI and ML projects, large models, and accelerated AI training.

With CPU: AMD EPYC™

RAM options: 128 GB - 512 GB

Why choose 1pbps for AI Infrastructure?

Reliable, scalable, and high-performance infrastructure designed specifically for AI and machine learning workloads.

Launch and manage your AI workloads effortlessly through our powerful and intuitive control panel.

From hardware provisioning to software tuning — we handle everything so you can focus purely on building models.

High-speed SSD storage, NVIDIA GPUs, and a low-latency network, all built for demanding AI workloads.

F.A.Q. – Dedicated Servers for AI & Machine Learning

Get quick answers to common questions about our high-performance infrastructure built for AI workloads.

-

Our servers are equipped with the latest GPUs, high-memory configurations, and NVMe storage to handle training and inference at scale.

We ensure low-latency networking and optimized infrastructure for frameworks like PyTorch, TensorFlow, and JAX.

-

Yes, we offer fully customizable configurations — choose the number of GPUs, memory size, storage type, and networking bandwidth based on your model requirements.

You can also consult our team to optimize for specific AI tasks like training LLMs or computer vision models.

-

Absolutely. We support horizontal scaling with private networking, load balancers, and orchestration tools like Kubernetes or Slurm.

Our team can also assist in deploying your infrastructure across multiple locations for better availability and speed.

-

Yes, we offer fully managed services, including OS setup, GPU driver and library installation (CUDA, cuDNN), monitoring, and security updates.

You focus on model development while we handle the infrastructure backend.